Overview of the Impact of Technology Enhanced Items

Large-scale assessments can be tedious and stressful for test takers, but test developers can change that by creating assessments that are engaging and effective and that provide deeper insights into students' higher-order thinking abilities. In a previous article,[1] I outlined rationales for conducting large-scale student assessments and offered a high-level summary of the many benefits of conducting them in a digital format. This article expands on how assessments can be enhanced to make them more engaging and effective for test takers. But first, a little background.

Many years ago, when the Education Quality and Accountability Office (EQAO) was pilot testing computer-based assessment (CBA) in schools across the province, the early tests were made up of the typical multiple-choice items, as well as short-answer and longer open-response items. Essentially, the questions that had been presented on paper were converted directly for delivery on a computer. Over the course of several months, EQAO staff visited schools to observe trial CBA administrations and to hold follow-up conversations with school staff and students about their reactions to the new approach. Even with these rudimentary computer-based tests, students were highly motivated. I recall an instance where a teacher pointed to a student in amazement. The boy, typically disengaged from school, was completely engrossed in the test-taking experience. Almost universally, students were excited to take an assessment on the computer, and many of them were disappointed when they learned we would not be returning the following day to administer assessments again!

Since those early days, the EQAO assessment modernization initiative has evolved significantly. With its move to online CBA, the Agency has been able to move away from the simple, unidimensional multiple-choice and open-response items. With the transition came the opportunity to expand the range of item types[2], which are not only engaging for students but can also enhance the effectiveness of assessments by allowing test takers to better demonstrate what they know and what they can do. Multi-media options such as maps, diagrams, photographs, animation, video, and audio, as well as technology-enhanced items (TEIs), including game-like simulations, further enhance engagement and can present students with real-world situations in which they can show their knowledge, skills and abilities.

Multilingual Formative Assessment for Student Success: Luxembourg

Many other jurisdictions have transitioned to digital assessments to make tests more efficient and effective and to enhance student engagement. In response to an increasingly heterogeneous, multilingual school population and a desire to promote digital awareness, Luxembourg’s Ministry of National Education, Children and Youth (MENJE) introduced a program called MathemaTIC in collaboration with their technology partner, Vretta. Digital education was meant to prepare students to learn, live and work in a 21st-century global world, characterized by ever-increasing use of new technologies, and to explore ways of using technology as an integral component of quality teaching and learning.

The program is a personal learning environment that provides 10- and 11-year-old students (Grades 5 and 6) with an engaging learning experience, including digital math resources in German, French, Portuguese, and English, to develop a strong foundation and confidence in mathematics. This integrated assessment system, adapted to individual student needs, offers students continuous, immediate, low-stakes feedback and remedial support as they work through a personalized module map (as shown in the diagram) at their own pace[3].

MathemaTIC contains interactive, visual, audio, and video math items/tasks. These items/tasks are divided into curriculum learning modules[4], where each module begins with a 10-minute diagnostic assessment to determine the student’s knowledge and ability with regard to the content. The diagnostic assessment results determine the sequence of items/tasks presented to the learner (adaptive model). (Additional activities may also be proposed by the teacher.) The program may be used in groups/classrooms, or individual students may work through the program independently at their own pace. Following each learning module, the system presents a 10-minute summative assessment that the teacher can administer to the whole class. Tracking of student results and progress over time allows teachers, students and parents to understand where students are with respect to their learning pathways.
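
The article does not describe how MathemaTIC actually sequences items, so the following is only a minimal sketch of the general idea of an adaptive model: diagnostic results decide which items a learner sees and in what order. The `Item` fields, the skill labels, the difficulty scale and the 0.8 mastery threshold are all hypothetical and are not taken from the program.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    item_id: str
    skill: str        # hypothetical skill label, e.g. "fractions"
    difficulty: int   # hypothetical scale: 1 (easy) to 3 (hard)


def sequence_items(diagnostic: dict[str, float], items: list[Item],
                   mastery: float = 0.8) -> list[Item]:
    """Order items so that the weakest diagnosed skills come first.

    `diagnostic` maps each skill to the proportion of diagnostic questions
    answered correctly (0.0 to 1.0). Items for skills at or above the
    assumed mastery threshold are skipped; unseen skills count as 0.0.
    """
    needed = [it for it in items if diagnostic.get(it.skill, 0.0) < mastery]
    # Weakest skill first, then easier items before harder ones.
    return sorted(needed,
                  key=lambda it: (diagnostic.get(it.skill, 0.0), it.difficulty))


# Illustrative data only.
bank = [
    Item("F1", "fractions", 1),
    Item("F2", "fractions", 2),
    Item("G1", "geometry", 1),
    Item("D1", "decimals", 2),
]
scores = {"fractions": 0.4, "geometry": 0.9, "decimals": 0.6}
plan = sequence_items(scores, bank)
print([it.item_id for it in plan])  # ['F1', 'F2', 'D1']
```

In this sketch the geometry item is dropped because the learner already scored above the assumed threshold, while the fractions items lead the sequence because that skill had the lowest diagnostic score. A real system would likely also re-estimate ability as the learner works through the module.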

MathemaTIC has a wide variety of tools[5], including the standard eraser, highlighter, zoom in/out, calculator and notepad features. Examples of other useful tools include the following:

An extensive array of item types is offered for mathematics, in addition to the standard drag-and-drop, multiple-choice, and number- and open-input response items. Examples of other innovative formats include the following:

An assortment of item feedback types is available for mathematics. Examples of feedback types include the following:

It is also interesting to note that, beginning with the 2024-25 school year, pre-primary children (3 to 5 years of age) will be introduced to a new program called MATHI. The country’s educators recognize that once pupils have reached the age of 8 or 9, any deficits in math acquired in the early years are difficult to make up. MATHI, which will initially take the form of paper-based activities and resources, is intended to foster pupils’ positive attitudes toward math while they are not yet afraid of, or inhibited by, the subject. The program will eventually be extended to 10- and 11-year-old students, and digital applications will be gradually introduced.[6]

When Didactics Meet Data Science: France

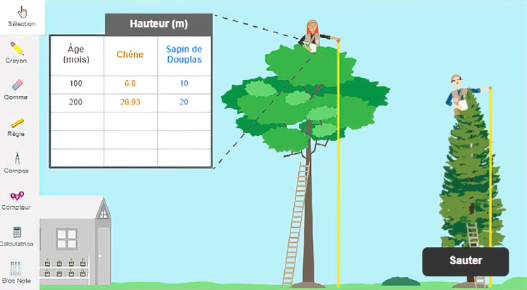

With the rising interest in TEIs, the Department of Evaluation, Prospective and Performance (DEPP) of the French Ministry of Education conducted a study[7] that included an analysis of the log data captured from the use of a TEI developed for the CEDRE assessment[8] in collaboration between their technology partner, Vretta, and SCRIPT of the Luxembourg Ministry. The aim was to identify how TEIs provide insights into students’ approaches to solving problems and their process strategies.

For their study, they chose the “Tree growth” TEI (as shown in the diagram), with a sample of 3,000 students in Grade 9. In this item, students enter the age of the tree in months in the table. A calculation tool returns the corresponding tree heights, and a graphing tool plots the points on the graph.

The results of the study showed that information on higher-order skills such as problem solving, devising a strategy and carrying out mathematical thinking, gathered from the student response log data, would help teachers better support students in their learning. This qualitative profile, based on students’ strategic patterns in solving TEIs, would add value to the national feedback provided to teachers, who would then be able to differentiate teaching in the classroom according to the cognitive profile of each student.
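
To make the idea of a log-based strategy profile more concrete, here is a small illustrative sketch. It is not the DEPP study's method, which the article does not detail. It assumes, hypothetically, that each student's log can be reduced to the ordered list of ages (in months) they typed into the table, and it invents simple labels ("systematic", "ordered", "unordered", "minimal exploration") purely for illustration.

```python
def profile_entries(ages_in_months: list[int]) -> dict[str, object]:
    """Summarise one student's sequence of table entries."""
    # Differences between consecutive entries, in the order they were typed.
    steps = [b - a for a, b in zip(ages_in_months, ages_in_months[1:])]
    return {
        "n_entries": len(ages_in_months),
        "n_distinct": len(set(ages_in_months)),
        "ascending": all(s > 0 for s in steps),
        "constant_step": len(steps) > 0 and len(set(steps)) == 1,
    }


def label_strategy(profile: dict[str, object]) -> str:
    """Map a profile to a hypothetical strategy label."""
    if profile["n_entries"] < 3:
        return "minimal exploration"
    if profile["ascending"] and profile["constant_step"]:
        return "systematic"   # e.g. 12, 24, 36, 48
    if profile["ascending"]:
        return "ordered"      # increasing, but with uneven gaps
    return "unordered"        # jumps back and forth


# Illustrative logs only; not data from the study.
logs = {
    "student_a": [12, 24, 36, 48],
    "student_b": [30, 6, 30, 100],
    "student_c": [5],
}
labels = {sid: label_strategy(profile_entries(ages)) for sid, ages in logs.items()}
print(labels)
```

Even a coarse summary like this hints at how process data can separate a student who samples the function at regular intervals from one who tries values with no visible plan, which is the kind of qualitative distinction a final answer alone cannot show.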

Conclusion

Using an expanded range of item types (including multi-media options and TEIs) presents students with authentic, real-world situations from which educators and administrators can gain deeper insight into numerous 21st-century skills that are traditionally difficult (or impossible) to capture with standard item types (or paper-based testing). Additionally, the assessments can be developed such that they are engaging for the learner. In the case of MathemaTIC, indications are that students and teachers in Luxembourg are enthusiastic and that teachers are gaining value from understanding the higher-order skills of their students, which include critical thinking and the ability to evaluate and analyze real-world problems; this bodes well for the success of the project. In my view, the same can be said for the recently implemented assessment modernization projects in the Canadian provinces of British Columbia, Alberta, Ontario, and New Brunswick.

About the Author

Dr. Jones has extensive experience in the fields of large-scale educational assessment and program evaluation and has worked in the assessment and evaluation field for more than 35 years. Prior to founding RMJ Assessment, he held senior leadership positions with the Education Quality and Accountability Office (EQAO) in Ontario, as well as the Saskatchewan and British Columbia Ministries of Education. In these roles, he was responsible for initiatives related to student, program and curriculum evaluation; education quality indicators; school and school board improvement planning; school accreditation; and provincial, national and international testing.

Dr. Jones began his career as an educator at the elementary, secondary and post-secondary levels. Subsequently, he was a researcher and senior manager for an American-based multi-national corporation delivering consulting services in the Middle East.

Feel free to reach out to Rick at richard.jones@rmjassessment.com (or via LinkedIn) to inquire about best practices in large-scale assessment and/or program evaluation.